Induction Heads

I was recently working on a project to try to understand how induction heads form in transformers. This is almost entirely derived from the work in A Mathematical Framework for Transformer Circuits and its sequel In-context Learning and Induction Heads. As a quick recap, induction heads are heads that look for a previous example of the current token, then copy the following token. This allows the model to repeat arbitrary sequences of tokens. A simple way to form this head is as a two-head circuit split across two layers. The head in layer 1 attends to the previous token and copies the value of the current token. The head in layer 2 composes with the head in layer 1 to attend to the previous instance of the current token, and copy the relevant output (the next token). It turns out that this form of induction circuit is also the kind that seems to be quickest to form in the toy models I’ve played with as well.

Measuring Composition

A good portion of A Mathematical Framework is devoted to investigating how this composition happens. It describes three kinds of composition, Q-Composition, K-Composition, and V-Composition, when , , and respectively read in a subspace affected by a previous head. It goes on to describe a way of measuring the amount of composition. In each case, the measure is of the form:

where is the Frobenius norm. The intent of this measure is to capture the amount that reads in information from the output of .

For Q-Composition and . K- and V-composition have similar structures, with correspondingly different matrices.

Scores in Practice

In an already-trained model, looking at the relative composition scores of heads does a reasonable job of explaining which heads are composing with which. In a few toy models I saw induction heads have K-composition scores of around 0.2-0.3 with their previous-token head, while non-composed heads had scores under 0.1. The paper suggests subtracting off the amount of composition expected in two random matrices, which in these models were about 0.1. This spread is large enough to be able to visualize composition patterns easily in a heatmap.

This is already a bit unsatisfying to me, though. I really want heads whose only job is induction to have a composition score of 1! I put that aside for a bit though because I was especially interested in seeing how the K-composition evolved during training while the induction head formed. It would be very satisfying to see a bunch of near-zero K-composition until around when induction heads form, then a bump up to its final value.

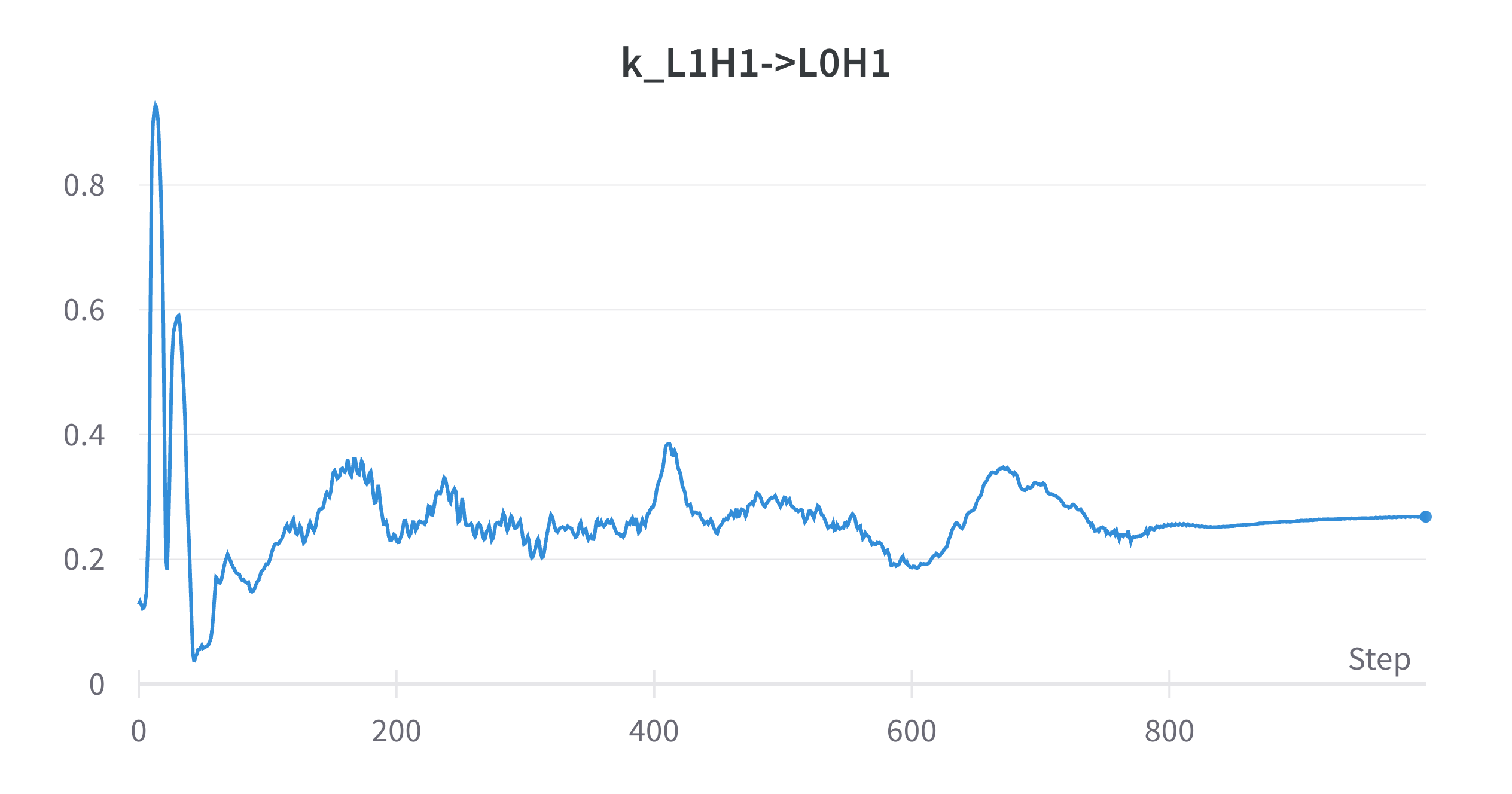

This is where I started going a bit nuts trying to understand things. Because when I plotted this graph, I got

What??

For context, induction heads form between steps 700-800 in this training run.

OK, so it’s somewhat unsatisfying if induction heads don’t have K-composition scores near one. It’s much more unsatisfying if they don’t have scores near one and random stuff that happens early on in training does get a score of one.

So I started trying to understand this measure a bit better.

Properties of this Measure

The Frobenius norm is sub-multiplicative, so this measure will take on values from zero to one. That feels good and proper. Great start.

Next, what does it mean to “read/write to the same space”? My intuition is that surely whatever we mean, the identity matrix reads and writes to the same space, and more generally, and do.

Nope! For a -dimensional identity matrix, , and , so this measure comes out to . Extra gross! This thing isn’t even dimension-invariant!

So how do you even get this thing to one then? This measures one when is a rank-1 matrix of constant rows, and is a rank-1 matrix of constant columns.

By the way, other matrix norms do have the property that this measure will come out to one for the identity matrix, but they seem to all have other weird properties, like only measuring the maximum direction in which the two matrices are operating.

Interpretation

My real motivation for writing this is to bait someone who is much better at linear algebra than me to think about this and explain what feels funny. In the interest of such baiting, I’ll now attempt to badly explain what it feels like is going on. Your job is to get mad at how ignorant this explanation is and then tweet at me.

It seems like the fundamental disconnect is that we want to be measuring the amount that can write into an arbitrary space for to read, but presently we’re measuring the amount that has to write into space for to read. So two orthonormal bases can compose with each other in arbitrary subspaces, but two rank-1 matrices have no choice but do so.

Is that maddening enough? I hope so! Please come hurl abuse my way as long as it has math in it.